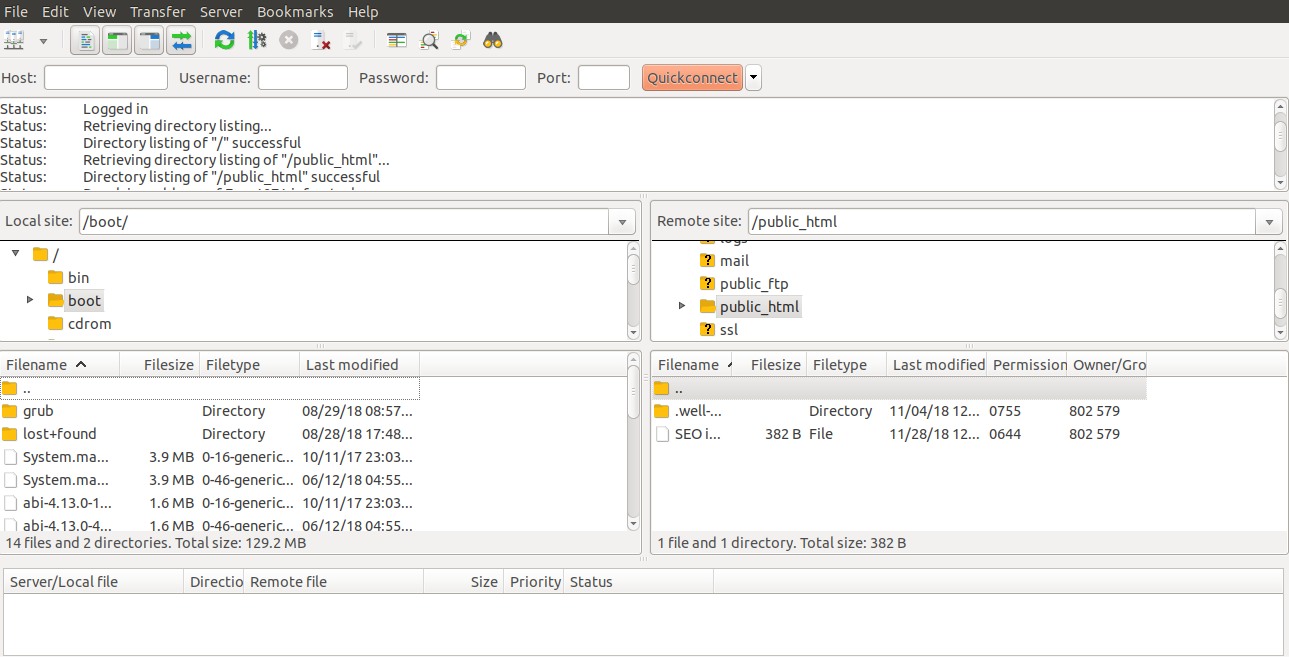

The account Admin role can access the S3 folder credentials from the Account settings page. You can find the details for the S3 bucket and the credentials in the Account Settings > API keys tab. You can use an FTP/SFTP client to access the S3 folders. Examples of such clients include Cyberduck, Transmit, FileZilla, and WinSCP.

The folders in the S3 bucket contain the following information:

- Automatic campaign reports. Daily campaign detail summary statistics at trigger or experiment level. Generated once a day. Note: Contact Blueshift to enable automatic campaign reports for your account.
- Campaign activity data. Blueshift captures and archives all campaign activity (sends, clicks, opens, delivered, and so on) to your S3 bucket. The data is batched and written to the folder every 5 minutes. This data can be accessed by using webhooks. Error data for any errors that occur when you access this folder using webhooks is also stored in a sub-folder.
- Daily exports of statistics at a campaign level. Please use automatic_campaign_reports going forward.
- Execution statistics, rendered templates for debugging/test campaigns, precomputed campaign segments, holdout users for automatic winner selection, data to be exported for segment reports, and so on.
- Catalog exports. The catalog is exported in CSV format and the filename is _.csv. If all catalogs are exported, the filename is export_catalog_.csv.
- Catalog file imports and error reports (in a sub-folder).
- Click reports for creatives. The click_reports sub-folder contains click reports by date.
- Any user import files that are processed.
- Logs for user updates made using APIs (in the user_updates sub-folder).
- Backend processing errors that might occur during user processing.
- Any events that are sent to Blueshift using file upload or the API.
- Blueshift generated derived events. For example, price drop, back in stock, and so on.
- Blueshift generated events like price_change, back_in_stock, and so on.
- Processed file uploads for recommendations or events.
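Once you have the bucket name and credentials, the top-level folders can also be listed programmatically. The following is a minimal sketch, assuming Python with the boto3 library; the function name `list_folders` and the optional `prefix` argument are hypothetical, and the actual bucket layout for your account may differ.

```python
from typing import Iterator


def list_folders(s3_client, bucket: str, prefix: str = "") -> Iterator[str]:
    """Yield the folder names found directly under `prefix` in `bucket`.

    `s3_client` is expected to behave like boto3.client("s3").
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    # Delimiter="/" asks S3 to group keys into "folders" (CommonPrefixes)
    # instead of returning every object underneath them.
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix, Delimiter="/"):
        for entry in page.get("CommonPrefixes", []):
            yield entry["Prefix"]


# Example usage (placeholder bucket name and credentials; requires boto3):
#   import boto3
#   s3 = boto3.client(
#       "s3",
#       aws_access_key_id="YOUR_ACCESS_KEY",
#       aws_secret_access_key="YOUR_SECRET_KEY",
#   )
#   for folder in list_folders(s3, "your-bucket-name"):
#       print(folder)
```

Passing the client in as an argument keeps the function independent of how the credentials are obtained.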

If you don't want to use the S3 bucket provided by Blueshift and want to export campaign activity and automatic campaign reports to your own Amazon S3 bucket, contact Blueshift to set up data replication.

FILEZILLA TO S3 DOWNLOAD

Amazon S3 cannot "pull" data from an external location. Therefore, you will need a script or program that will retrieve the data from the FTP server and upload it to Amazon S3.

It would be best to run such a script from the FTP server itself, so that the data can be sent to S3 without having to download it from the FTP server first. If this is not possible, you could run the script on any computer on the Internet, such as your own computer or an Amazon EC2 instance.

The simplest way to upload to Amazon S3 is to use the AWS Command-Line Interface (CLI). It has an aws s3 cp command to copy files or, depending on what needs to be copied, it might be easier to use the aws s3 sync command, which automatically copies new or modified files. The script could be triggered on a schedule (cron on Linux or a Scheduled Task on Windows).

If you are using an Amazon EC2 instance, you could save money by turning off the instance when it is not required. The flow could be:

1. Create an Amazon CloudWatch Event rule that triggers an AWS Lambda function.
2. The AWS Lambda function can call StartInstances() to start a stopped EC2 instance.
3. The Amazon EC2 instance can use a startup script that will run your process.
4. At the end of the process, tell the operating system to shut down (sudo shutdown now -h).

This might seem like a lot of steps, but the CloudWatch Event rule and Lambda function are trivial to configure.
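As a rough illustration of the retrieve-then-upload script described above, here is a minimal sketch in Python. It uses the standard-library ftplib module and the boto3 library rather than the AWS CLI, so treat it as one possible approach, not the only one. The function names, host, credentials, bucket and prefix are all hypothetical. The sketch assumes the FTP directory contains only plain files, and it buffers each file in memory, which is only suitable for small files.

```python
import io


def copy_ftp_file_to_s3(ftp, s3_client, remote_name: str, bucket: str, key: str) -> int:
    """Retrieve one file from the FTP server and upload it to S3.

    `ftp` is expected to behave like ftplib.FTP and `s3_client` like
    boto3.client("s3"). Returns the number of bytes transferred.
    """
    buffer = io.BytesIO()
    # Step 1: retrieve the data from the FTP server into memory.
    ftp.retrbinary(f"RETR {remote_name}", buffer.write)
    size = buffer.tell()
    buffer.seek(0)
    # Step 2: upload the data to Amazon S3.
    s3_client.upload_fileobj(buffer, bucket, key)
    return size


def copy_all(ftp, s3_client, bucket: str, prefix: str = "") -> list:
    """Copy every entry in the FTP server's current directory to S3.

    Assumes the directory holds only files (RETR fails on directories).
    Returns the list of S3 keys that were written.
    """
    copied = []
    for name in ftp.nlst():
        key = prefix + name
        copy_ftp_file_to_s3(ftp, s3_client, name, bucket, key)
        copied.append(key)
    return copied


# Example usage (placeholder host, login and bucket; requires boto3):
#   import boto3
#   from ftplib import FTP
#   with FTP("ftp.example.com") as ftp:
#       ftp.login("user", "password")
#       copy_all(ftp, boto3.client("s3"), "my-bucket", prefix="ftp-import/")
```

A script like this could then be run on a schedule, in the same way as a CLI-based one.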
